This is what gets me about the discussion of AI. People are anthropomorphizing it as if it thinks for itself. It doesn’t think. It doesn’t have consciousness. It’s not humanlike. It has zero emotional intelligence. It learns through data and pattern recognition, so its capabilities are limited by the completeness and quality of the data it was trained on.

Long story short, we don't know, like, at all. We can't qualify one iota of the human existence.

One thing everyone always seems to get wrong about the robot workers they think will replace them is assuming those robots will need full "Terminator"-like locomotion. Not true at all. If there is no need for bipedal locomotion or opposable-thumb dexterity, it will not be implemented in the robot.




AI, Great Friend or Dangerous Foe?

- Thread starter Ingomike


The #1 community for Gun Owners in Indiana

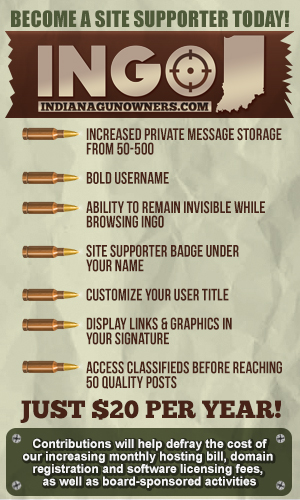

Member Benefits:

Fewer Ads! Discuss all aspects of firearm ownership Discuss anti-gun legislation Buy, sell, and trade in the classified section Chat with Local gun shops, ranges, trainers & other businesses Discover free outdoor shooting areas View up to date on firearm-related events Share photos & video with other members ...and so much more!

Member Benefits:


I’m not sure if this is what you’re saying… more data doesn’t mean more bias unless the added data includes bias. The best training data is the most diverse, high-quality data.

That would at most be a side effect. The more data you curate for a model, the more biased it becomes. For some models, like voice conversion models, the more data you curate, the better the result will likely be. For large language models like ChatGPT and such, the opposite is true: the more biased you make them, the less useful they are. The primary reason for censorship is divide and conquer.

Let’s say you’re a software engineer working with a fairly new code library, and you want to know how to do a certain thing with it. If the AI's training data came exclusively from Stack Overflow, the AI would give bad advice about as often as Stack Overflow does.

Bottom line is, AI is only as accurate as the quality of the data it trains on. Another example: if it only trained on official narratives about COVID, it would tell you to follow the science, where TheScience™ is prescribed by people who stood to make a lot of money from it.
